Rocky the Raptor here, RPost’s cybersecurity product evangelist. Sam Altman, the guy behind ChatGPT, recently addressed a group of regulators and banking execs and shared his worries about how AI voice-mimicking tech could fuel fraud in the banking sector. He warned the Federal Reserve of an incoming wave of financial crime: not just hypotheticals, but real fraud with real damage, arriving real fast.

Let me break it down. At that gathering, Altman pointed out that a few powerful AI models can now mimic someone’s voice from just a few short samples.

Think about that.

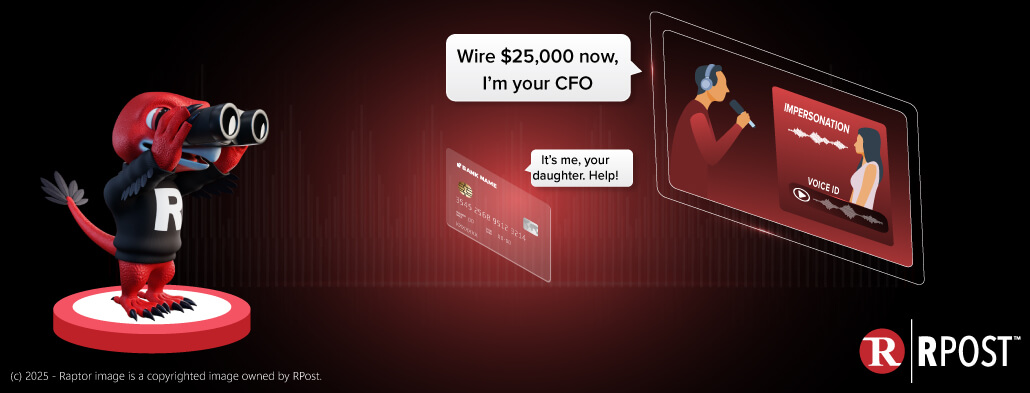

A cybercriminal scrapes a voicemail, podcast, or Zoom call. A few seconds later, they’ve cloned your voice. Next, your bank receives a call that sounds exactly like you and easily passes its voiceprint authentication tools. From there, the cybercriminals can move your money freely.

This impersonation game isn’t exactly new, and the fraud crisis is already unfolding (a year back, we wrote about AI-powered face and voice cloning with Microsoft’s VASA-1 project!). In fact, NFL star Shedeur Sanders also fell victim to impersonation recently.

For Shedeur Sanders, this impersonation was a prank and had no financial impact. But you may not be so lucky. If the CEO of an AI giant is nervous, you should be too.

Impersonations aimed at YOU may be more precisely tailored, and more successful at luring money out of you.

So, what can you actually do about it?

Well, if you’re relying on old-school spam filters and perimeter firewalls, not much. These systems were built to block malicious links, not impersonators who sound, write, and now even look exactly like your boss, your colleague, or your kid.

The good news for YOU? This is why we built RPost’s PRE-Crime™ suite, powered by RAPTOR™ AI technology.

Not simply to react to fraud attempts, but to pre-empt threats during the planning phase itself and stop them BEFORE they cause harm.

This RPost tech can:

Impersonation today is no longer about guessing passwords or phishing links. It’s about CONTEXT -- in voice, tone, message history, and urgency.

You don’t need to fear the black-hat spies. Get a white-hat AI like RAPTOR in your corner.

Try it. Simply put, it works.
